StarCoder: your local coding assistant

Microsoft’s GitHub Copilot has brought about a revolution in software development. This AI assistant helps developers with a wide range of coding tasks, making their lives easier. However, one drawback is that it isn’t a standalone application but a cloud-based service. This means that users must accept its terms of service and pay for a subscription.

Fortunately, the open-source world offers numerous alternatives. At the time of writing, the most notable alternative to Copilot is StarCoder, developed by the BigCode project. StarCoder is a large neural network model with 15.5B parameters, trained on more than 80 programming languages.

This model is distributed on Hugging Face (HF) as a gated model under the BigCode OpenRAIL-M v1 license agreement. You can download and use it for free, but you need an HF account with a linked SSH key. Because the model is gated, you also have to accept the license agreement on the model page while logged in. Before you can download the model, there are a few additional steps to take.

Add SSH key to HF

Before starting, set up port forwarding in your SSH client (remote port 7860 to 127.0.0.1:7860). You can find additional information in the following articles:
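
If you use the OpenSSH command-line client, the forwarding described above is a local forward (the -L option): port 7860 on your workstation is tunnelled to 127.0.0.1:7860 on the server. The helper below is a minimal sketch; the function name open_webui_tunnel is hypothetical, and usergpu and 203.0.113.10 are placeholders for your own user name and server address.

```bash
#!/usr/bin/env bash
# Hypothetical helper: forward local port 7860 to 127.0.0.1:7860 on the GPU server,
# so that http://127.0.0.1:7860 in your local browser reaches the web UI.
open_webui_tunnel() {
  local user="$1" host="$2" port="${3:-7860}"
  # -N: do not run a remote command, only keep the tunnel open.
  # -L local_port:target_host:target_port, where target_host is resolved on the server.
  ssh -N -L "${port}:127.0.0.1:${port}" "${user}@${host}"
}

# Example (placeholders): open_webui_tunnel usergpu 203.0.113.10
```

Keep that terminal open while you work with the web UI and press Ctrl + C to close the tunnel. You can also add the same -L 7860:127.0.0.1:7860 option to the ssh command you normally use to log in.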

Update the package cache and the installed packages:

sudo apt update && sudo apt -y upgrade

Let’s install pip, the Python package manager:

sudo apt install python3-pip

Generate an SSH key that you will add to Hugging Face:

cd ~/.ssh && ssh-keygen -t rsa

When the key pair is generated, you can display the public key in the terminal emulator:

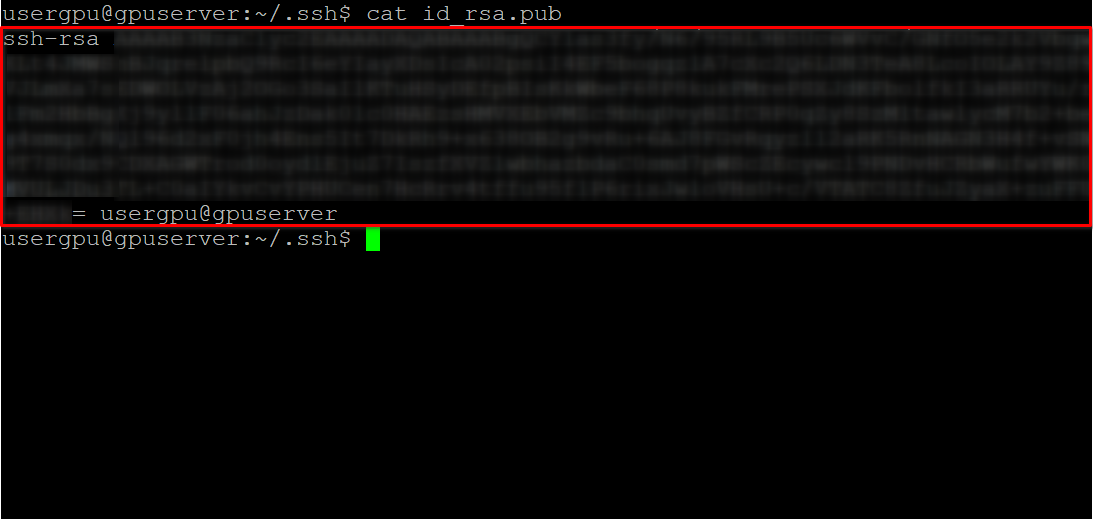

cat id_rsa.pub

Copy the entire line, from ssh-rsa to usergpu@gpuserver (your own user and host name appear at the end), as shown in the following screenshot:

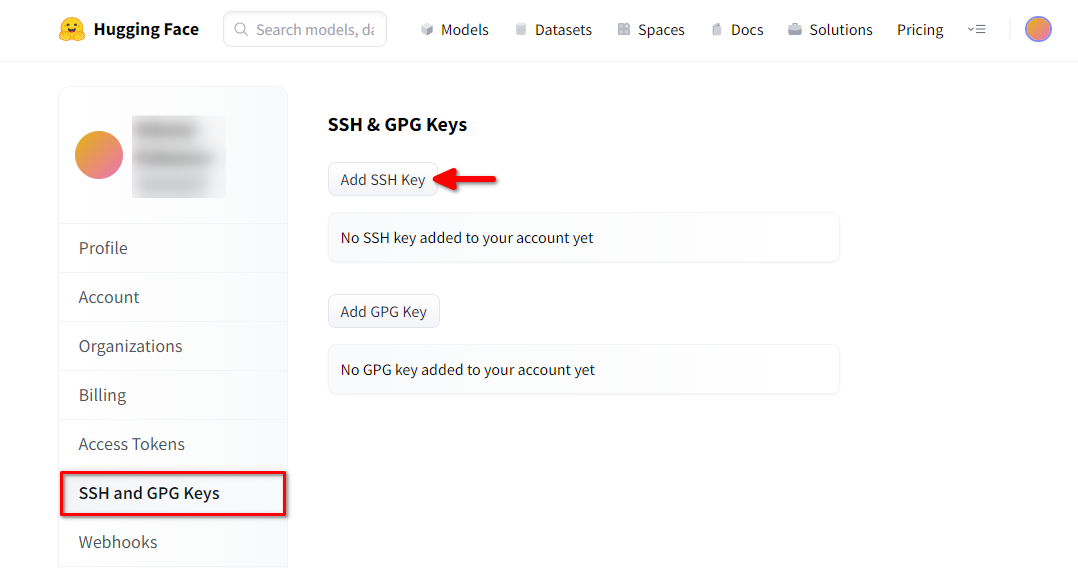
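
Before pasting the key into Hugging Face, you can sanity-check it. The function below is a sketch with a hypothetical name, print_pubkey. It assumes the default path ~/.ssh/id_rsa.pub and accepts only ssh-rsa and ssh-ed25519 keys. Pass another path if your key has a different name, for example ~/.ssh/id_ed25519.pub, which newer OpenSSH releases create by default when ssh-keygen is run without -t.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a public key only if it looks like a single-line
# OpenSSH public key ("<type> <base64 key> <comment>").
print_pubkey() {
  local file="${1:-$HOME/.ssh/id_rsa.pub}" type
  if [ ! -f "$file" ]; then
    echo "not found: $file" >&2
    return 1
  fi
  # A public key file holds exactly one non-empty line.
  if [ "$(grep -c . "$file")" -ne 1 ]; then
    echo "expected exactly one line in $file" >&2
    return 1
  fi
  # The first field of the line is the key type.
  read -r type _ < "$file"
  case "$type" in
    ssh-rsa|ssh-ed25519) cat "$file" ;;
    *) echo "unexpected key type '$type' in $file" >&2; return 1 ;;
  esac
}

# Example: print_pubkey            (uses ~/.ssh/id_rsa.pub)
#          print_pubkey ~/.ssh/id_ed25519.pub
```

Copy the whole line the function prints; if it reports an error instead, check the key file before continuing.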

Open a web browser, type https://huggingface.co/ into the address bar and press Enter. Log in to your HF account and open Profile settings. Then choose SSH and GPG Keys and click the Add SSH Key button:

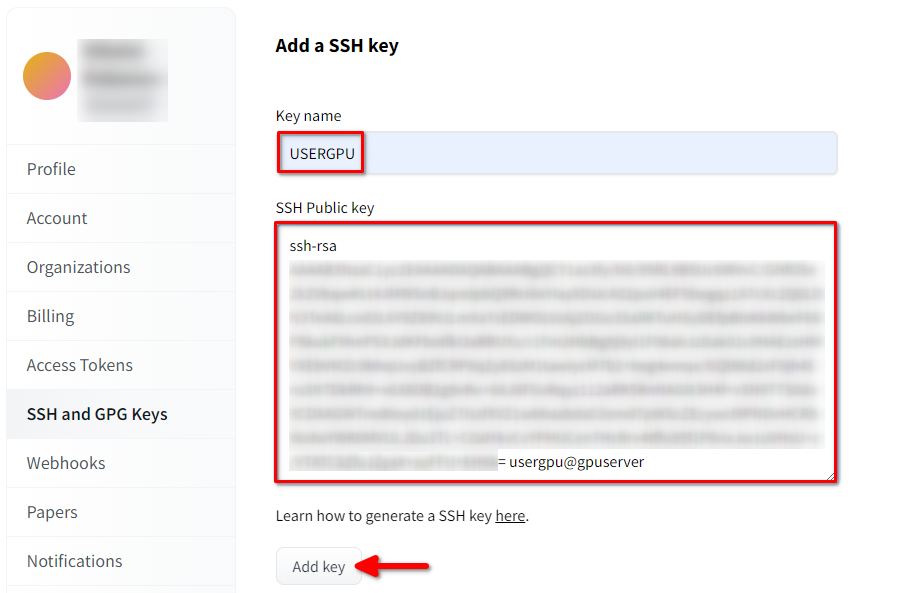

Enter a key name, paste the SSH public key you copied from the terminal, and save it by clicking Add key.
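
Once the key is saved, you can check from the server that Hugging Face accepts it. The sketch below wraps ssh -T git@hf.co in a hypothetical function, check_hf_ssh. It assumes that a successful login replies with a greeting containing "welcome to Hugging Face"; if the wording you see differs, adjust the pattern. Run the plain ssh -T git@hf.co command once by hand first so you can confirm the host key of hf.co.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that hf.co accepts your SSH key.
# Assumption: on success the server's reply contains "welcome to Hugging Face".
check_hf_ssh() {
  local reply
  # -T: do not request a pseudo-terminal; only the server's short reply is needed.
  reply=$(ssh -T git@hf.co 2>&1)
  case "$reply" in
    *"welcome to Hugging Face"*)
      echo "SSH key accepted by hf.co"
      ;;
    *)
      echo "SSH check failed: $reply" >&2
      return 1
      ;;
  esac
}

# Example: check_hf_ssh
```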

Your HF account is now linked to the public SSH key, while the other half of the pair (the private key) stays on the server. The next step is to install the Git LFS (Large File Storage) extension, which Git uses to download large files such as neural network models. Open your home directory:

cd ~/

Download and run the shell script. This script adds a third-party repository that provides git-lfs:

curl -s https://packagecloud.io/install/repositories/github/git-lfs/script.deb.sh | sudo bash

Now you can install it with the standard package manager:

sudo apt-get install git-lfs

Let’s configure Git to use your HF username:

git config --global user.name "John"

Then set the email address linked to your HF account:

git config --global user.email "john.doe@example.com"

Download the model

Please note that StarCoder in binary format takes up a significant amount of disk space (more than 75 GB). Don’t forget to refer to this article to make sure you’re using the correct mounted partition.
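
Before cloning, you can check that the target partition has enough room. The sketch below uses a hypothetical function name, has_free_space, and measures in GiB; the 75 in the example is the approximate figure quoted above, not an exact requirement, so leave yourself some margin.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the filesystem holding DIR has at least NEED_GIB GiB free.
has_free_space() {
  local dir="$1" need_gib="$2" avail_kib
  # -P: POSIX output (one line per filesystem); -k: sizes in 1 KiB blocks.
  # Column 4 of the second line is the available space.
  avail_kib=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
  if [ "$avail_kib" -ge $(( need_gib * 1024 * 1024 )) ]; then
    echo "OK: $(( avail_kib / 1024 / 1024 )) GiB free on $dir"
  else
    echo "Not enough space on $dir: need $need_gib GiB" >&2
    return 1
  fi
}

# Example: has_free_space /mnt/fastdisk 75
```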

Everything is ready for the model download. Open the target directory:

cd /mnt/fastdisk

And start downloading the repository:

git clone git@hf.co:bigcode/starcoder

This process can take up to 15 minutes, depending on your connection speed, so please be patient. You can monitor progress by running the following command in another SSH session:

watch -n 0.5 df -h

Here you’ll see the free space on the mounted disk decrease, which confirms that the download is progressing and the data is being saved. The output refreshes every half second. To stop watching, press Ctrl + C.
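
The df output covers every mounted filesystem, and other processes may write to the same disk. As an alternative, you can watch the size of the repository directory itself. The sketch below uses a hypothetical function, dir_size_mib, and assumes the clone goes to /mnt/fastdisk/starcoder.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print how much disk space a directory currently uses, in MiB.
dir_size_mib() {
  local dir="$1"
  # -s: one total for the whole directory; -m: report the size in MiB.
  du -sm "$dir" | cut -f1
}

# Example: print the size every 5 seconds until you press Ctrl + C
# while sleep 5; do echo "$(date +%T) $(dir_size_mib /mnt/fastdisk/starcoder) MiB"; done
```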

Run the full model with WebUI

Clone the text-generation-webui repository:

git clone https://github.com/oobabooga/text-generation-webui.git

Open the cloned directory:

cd text-generation-webui

Run the start script:

./start_linux.sh --model-dir /mnt/fastdisk

The script checks whether the required dependencies are present on the server and installs any missing ones automatically. When the application starts, open your web browser and go to the following address:

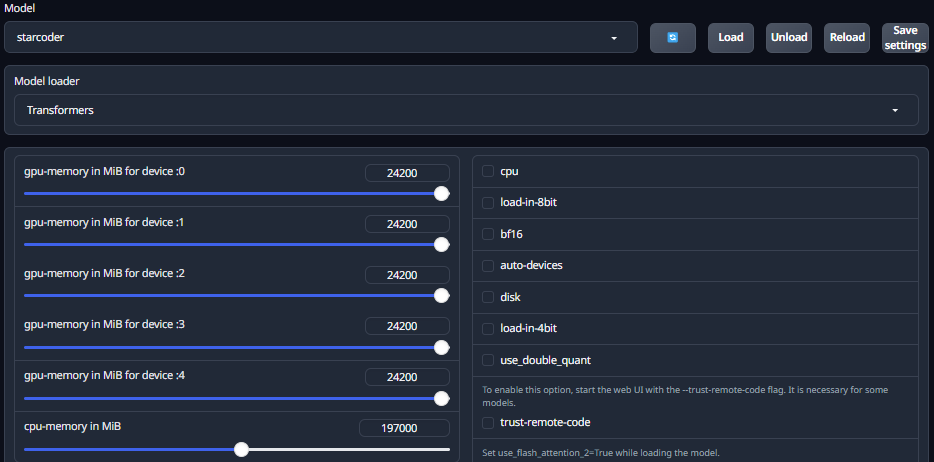

http://127.0.0.1:7860

Open the Model tab and select the downloaded starcoder model from the drop-down list. In the Model loader list, choose Transformers. Set the maximum GPU memory slider for each installed GPU. This is very important: setting it to 0 restricts the use of VRAM and prevents the model from loading correctly. You also need to set the maximum RAM usage. Now click the Load button and wait for the loading process to complete.
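
To choose values for the GPU memory sliders, it helps to know how much memory each card has. nvidia-smi can report this as CSV; the hypothetical vram_summary function below reads that output and prints per-GPU and total memory in MiB. It assumes the sliders take values in MiB; how much headroom to leave below each card’s total is your call.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarise "index, memory.total" CSV lines (MiB) from nvidia-smi.
vram_summary() {
  # Fields are separated by a comma followed by optional spaces.
  awk -F', *' '
    NF >= 2 { printf "GPU %s: %d MiB\n", $1, $2; total += $2 }
    END     { printf "Total: %d MiB\n", total }
  '
}

# Example:
# nvidia-smi --query-gpu=index,memory.total --format=csv,noheader,nounits | vram_summary
```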

Switch to the Chat tab and test a conversation with the model. Please note that StarCoder isn’t designed for dialogue the way ChatGPT is. However, it can still be useful for checking code for errors and suggesting fixes.

If you want a full-fledged dialogue model, you could try two other models: starchat-alpha and starchat-beta. These models were fine-tuned to hold a dialogue, just like ChatGPT. The following commands download them:

For starchat-alpha:

git clone git@hf.co:HuggingFaceH4/starchat-alpha

For starchat-beta:

git clone git@hf.co:HuggingFaceH4/starchat-beta

The loading procedure is the same as described above. You can also find a C++ implementation of StarCoder, which is well suited to CPU inference.
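
If you want both chat models, a small loop saves some typing. The sketch below uses a hypothetical function name, clone_hf_models, and assumes you clone into the same /mnt/fastdisk directory that the web UI reads models from.

```bash
#!/usr/bin/env bash
# Hypothetical helper: clone Hugging Face model repositories over SSH into TARGET.
# The body runs in a subshell, so the cd below does not change your current directory.
clone_hf_models() (
  target="$1"
  shift
  cd "$target" || exit 1
  for repo in "$@"; do
    name="${repo##*/}"
    # Skip repositories whose directory already exists, e.g. from an earlier run.
    if [ -d "$name" ]; then
      echo "skip: $name already exists"
      continue
    fi
    git clone "git@hf.co:${repo}" || exit 1
  done
)

# Example:
# clone_hf_models /mnt/fastdisk HuggingFaceH4/starchat-alpha HuggingFaceH4/starchat-beta
```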


Updated: 04.01.2026

Published: 17.01.2025