Your own Vicuna in Linux

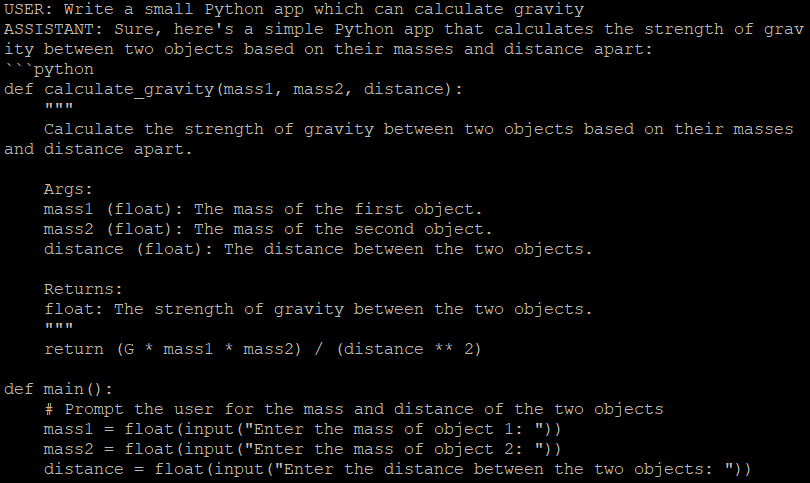

This article will guide you through the process of deploying a basic LLaMA alternative on a LeaderGPU server. We will utilize the FastChat project and the freely available Vicuna model for this purpose.

Vicuna is a chat model fine-tuned from Meta's LLaMA family; version 1.5, used here, is built on Llama 2. Its 7B variant fits on a single GPU, which gives a good balance between performance and resource requirements and makes it suitable for both testing and production environments.

Preinstallation

Let’s prepare to install FastChat by updating the package cache and upgrading the installed packages:

sudo apt update && sudo apt -y upgrade

Install NVIDIA® drivers automatically using the following command:

sudo ubuntu-drivers autoinstall

You can also install these drivers manually with our step-by-step guide. Then, reboot the server:

sudo shutdown -r now

The next step is to install pip (Package Installer for Python):

sudo apt install python3-pip

Install FastChat
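Before installing FastChat, it may be worth confirming that the driver from the previous section is active after the reboot. The sketch below is only an illustration and not part of FastChat: it asks nvidia-smi for each GPU's name and total memory. The helper names parse_gpu_lines and list_gpus are made up for this example, and the parser assumes nvidia-smi reports memory in the form "16376 MiB".

```python
import shutil
import subprocess


def parse_gpu_lines(output: str) -> list[tuple[str, int]]:
    """Parse lines such as 'GPU name, 16376 MiB' into (name, MiB) pairs."""
    gpus = []
    for line in output.strip().splitlines():
        # Split on the last comma so a comma inside the name cannot break parsing.
        name, memory = (part.strip() for part in line.rsplit(",", 1))
        gpus.append((name, int(memory.split()[0])))
    return gpus


def list_gpus() -> list[tuple[str, int]]:
    """Return the GPUs reported by nvidia-smi, or [] if it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_lines(result.stdout)


if __name__ == "__main__":
    gpus = list_gpus()
    if not gpus:
        print("No GPU visible: check the driver installation and reboot.")
    for name, mib in gpus:
        print(f"{name}: {mib} MiB")
```

An empty result usually means the driver is not loaded yet, in which case repeating the driver installation and reboot steps is the first thing to try.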

From PyPI

There are two possible ways to install FastChat. You can install it directly from PyPI:

pip3 install "fschat[model_worker,webui]"

From GitHub

Alternatively, you can clone the FastChat repository from GitHub and install it:

git clone https://github.com/lm-sys/FastChat.git

cd FastChat

Don’t forget to upgrade pip before proceeding:

pip3 install --upgrade pip

pip3 install -e ".[model_worker,webui]"

Run FastChat
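Whichever installation method was used, a quick check that the package is visible to Python can save time before the first launch. The following is a minimal sketch using only the standard library. It relies on the names that appear in this article: the distribution is called fschat and the importable module is fastchat. The helper names are made up for this example.

```python
import importlib.util
from importlib import metadata


def module_available(module_name: str) -> bool:
    """Return True if a top-level module can be found on the import path."""
    return importlib.util.find_spec(module_name) is not None


def installed_version(dist_name: str):
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("fastchat importable:", module_available("fastchat"))
    print("fschat version:", installed_version("fschat"))
```

Run it as the same user that will later run the services, since a user-level pip installation is only visible to that user.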

First start

To ensure a successful initial launch, it’s recommended to manually call FastChat directly from the command line:

python3 -m fastchat.serve.cli --model-path lmsys/vicuna-7b-v1.5

This command automatically downloads the model specified with the --model-path parameter. The 7b in the name stands for 7 billion parameters. This is the lightest model, suitable for GPUs with 16 GB of video memory. Links to models with a larger number of parameters can be found in the project’s README file.
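The 16 GB figure can be sanity-checked with simple arithmetic. The sketch below estimates the memory taken by the weights alone, assuming 16-bit (2-byte) weights and a round 7 billion parameters. Both are assumptions made for illustration, and real usage is higher because activations and the generation cache also need memory.

```python
def weights_size_gib(num_parameters: float, bytes_per_parameter: int = 2) -> float:
    """Approximate size of the model weights in GiB (weights only, no runtime overhead)."""
    return num_parameters * bytes_per_parameter / 1024**3


if __name__ == "__main__":
    for label, params in (("7b", 7e9), ("13b", 13e9)):
        print(f"{label}: about {weights_size_gib(params):.1f} GiB of weights")
```

Under these assumptions the 7b weights take roughly 13 GiB, which leaves some headroom on a 16 GB card, while a 13b model would not fit without further measures.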

You can now chat with the model directly in the command line interface, or you can set up a web interface. The web interface consists of three components:

- Controller

- Workers

- Gradio web server
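Each component is started from its own FastChat module, so the three systemd units created in the next section differ only in their description and command. As a rough sketch, they could be generated from one template instead of being typed by hand. The template and the render_unit helper are illustrative, while the values (user usergpu, the module names, the model path) are the ones used in this article.

```python
UNIT_TEMPLATE = """[Unit]
Description={description}

[Service]
User={user}
WorkingDirectory=/home/{user}
ExecStart=python3 -m {command}
Restart=always

[Install]
WantedBy=multi-user.target
"""

# Unit name -> (description, module and arguments passed to "python3 -m").
COMPONENTS = {
    "vicuna-controller": ("Vicuna controller service", "fastchat.serve.controller"),
    "vicuna-worker": (
        "Vicuna worker service",
        "fastchat.serve.model_worker --model-path lmsys/vicuna-7b-v1.5",
    ),
    "vicuna-webserver": ("Vicuna web server", "fastchat.serve.gradio_web_server"),
}


def render_unit(name: str, user: str = "usergpu") -> str:
    """Return the text of the systemd unit file for one component."""
    description, command = COMPONENTS[name]
    return UNIT_TEMPLATE.format(description=description, user=user, command=command)


if __name__ == "__main__":
    # Print the units instead of writing to /etc/systemd/system directly.
    for name in COMPONENTS:
        print(f"# {name}.service")
        print(render_unit(name))
```

Printing rather than writing the files keeps the sketch safe to run as an ordinary user; the output can then be pasted into the files created below.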

Set up services

Let’s transform each component into a separate systemd service. Create 3 separate files with the following contents:

sudo nano /etc/systemd/system/vicuna-controller.service

[Unit]

Description=Vicuna controller service

[Service]

User=usergpu

WorkingDirectory=/home/usergpu

ExecStart=python3 -m fastchat.serve.controller

Restart=always

[Install]

WantedBy=multi-user.target

sudo nano /etc/systemd/system/vicuna-worker.service

[Unit]

Description=Vicuna worker service

[Service]

User=usergpu

WorkingDirectory=/home/usergpu

ExecStart=python3 -m fastchat.serve.model_worker --model-path lmsys/vicuna-7b-v1.5

Restart=always

[Install]

WantedBy=multi-user.target

sudo nano /etc/systemd/system/vicuna-webserver.service

[Unit]

Description=Vicuna web server

[Service]

User=usergpu

WorkingDirectory=/home/usergpu

ExecStart=python3 -m fastchat.serve.gradio_web_server

Restart=always

[Install]

WantedBy=multi-user.target

systemd normally reads its unit files during system startup, so it will not see the new ones until the next boot. You can make it reload them manually using the following command:

sudo systemctl daemon-reload

Now, let’s add the three new services to startup and launch them immediately using the --now option:

sudo systemctl enable vicuna-controller.service --now && sudo systemctl enable vicuna-worker.service --now && sudo systemctl enable vicuna-webserver.service --now

However, if you open the web interface at http://[IP_ADDRESS]:7860 at this point, you’ll find an unusable interface with no available models. To resolve this issue, stop the web interface service:

sudo systemctl stop vicuna-webserver.service

Execute the web service manually:

python3 -m fastchat.serve.gradio_web_server

This action calls another script, which registers the previously downloaded model in a Gradio internal database. Wait a few seconds, then interrupt the process using the Ctrl + C shortcut.

Add authentication

We’ll also take care of security and activate a simple authentication mechanism for accessing the web interface. Open the following file if you installed FastChat from PyPI:

sudo nano /home/usergpu/.local/lib/python3.10/site-packages/fastchat/serve/gradio_web_server.py

or this one if you installed it from GitHub:

sudo nano /home/usergpu/FastChat/fastchat/serve/gradio_web_server.py

Scroll down to the end. Find this line:

auth=auth,

Replace it with a username and password of your choice:

auth=("username","password"),

Save the file and exit using the Ctrl + X shortcut. Finally, start the web interface:

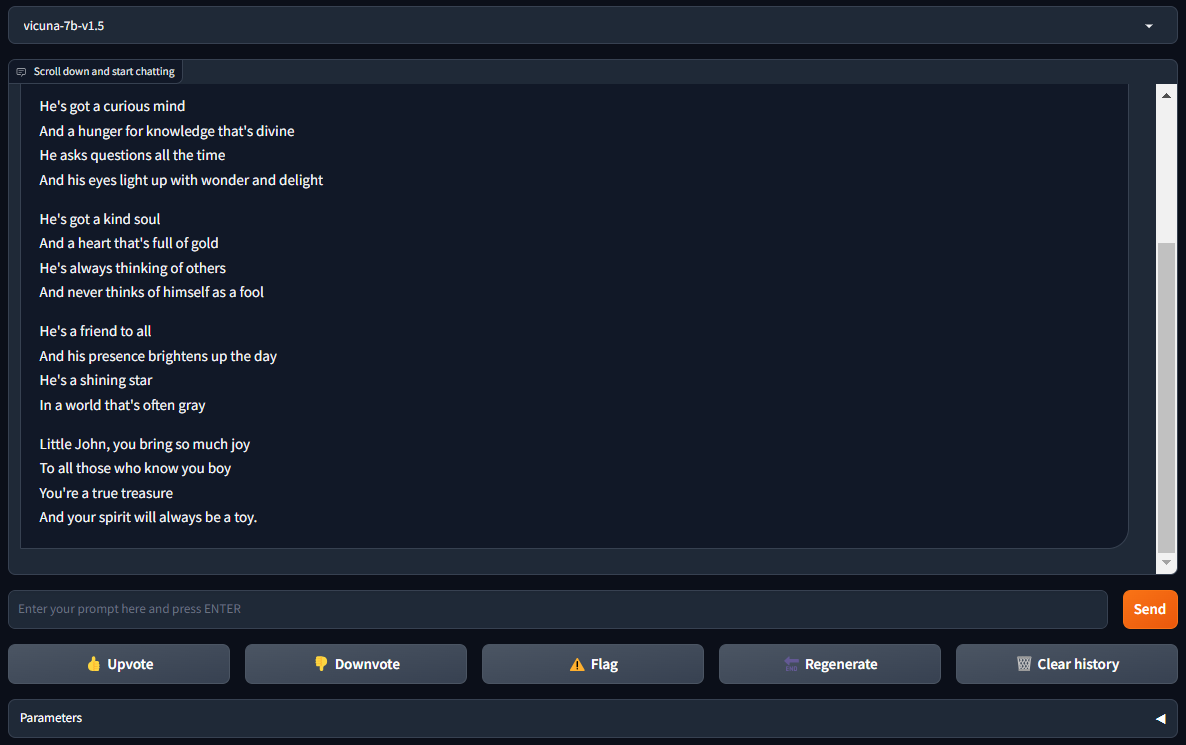

sudo systemctl start vicuna-webserver.serviceOpen http://[IP_ADDRESS]:7860 in your browser and enjoy FastChat with Vicuna:
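If the page does not load, it can help to see which of the three services is actually listening. The sketch below tries a plain TCP connection to each one from the server itself. Port 7860 is the one used in this article; ports 21001 (controller) and 21002 (worker) are assumed FastChat defaults and should be adjusted if the services were started with different settings. The is_listening helper is made up for this example.

```python
import socket

# 7860 comes from this article; 21001 and 21002 are assumed FastChat defaults.
PORTS = {"controller": 21001, "worker": 21002, "web server": 7860}


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    for name, port in PORTS.items():
        state = "up" if is_listening("localhost", port) else "down"
        print(f"{name:<12} port {port}: {state}")
```

A service reported as down can then be inspected with systemctl status and the name of the corresponding unit.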


Updated: 04.01.2026

Published: 20.01.2025