AlexNet GPU

LeaderGPU® services geared towards changing GPU-computing market game rules. Distinctive LeaderGPU® characteristics demonstrates the astonishing speed of calculations for the Alexnet model - 2.3 times faster than in Google cloud, and 2.2 times faster than in the AWS (data is given for 8x GTX 1080). The cost of per-minute lease of the GPU in LeaderGPU® starts from 0.02 euros, which is 4.1 times lower than in Google Cloud, and 5.35 times lower than in AWS (as of July 7, 2017).

Throughout this article we will provide test results for the Alexnet model in services such as LeaderGPU®, AWS and Google Cloud. You will understand why LeaderGPU® is a preferable choice for all GPU-computing needs.

All considered tests were carried out using python 3.5 and Tensorflow-gpu 1.2 on machines with GTX 1080, GTX 1080 TI and Tesla® P 100 with CentOS 7 operating system installed and CUDA® 8.0 library.

The following commands were used to run the test:

# git clone https://github.com/tensorflow/benchmarks.git# python3.5 benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py --num_gpus=?(Number of cards on the server) --model alexnet --batch_size 32 (64, 128, 256, 512)GTX 1080 instances

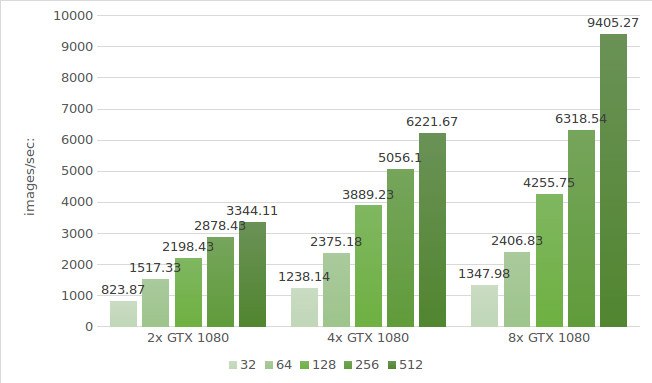

The first testing of the Alexnet model will be performed with the instances of the GTX 1080. Testing environment data (with batch sizes 32, 64, 128, 256 and 512) is provided below:

Testing environment:

- Instance types:ltbv17, ltbv13, ltbv16

- GPU: 2x GTX 1080, 4x GTX 1080, 8x GTX 1080

- OS:CentOS 7

- CUDA / cuDNN:8.0 / 5.1

- TensorFlow GitHub hash:b1e174e

- Benchmark GitHub hash:9165a70

- Command:

# python3.5 benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py --num_gpus=2 (4,8) --model alexnet --batch_size 32 (optional 64, 128,256, 512) - Model:Alexnet

- Date of testing:June 2017

The test results are shown in the diagram below:

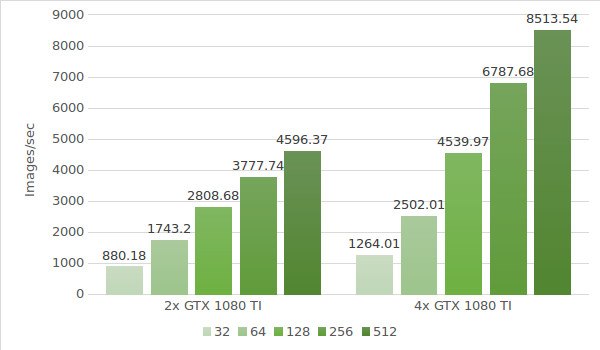

GTX 1080TI instances

Next step is testing the Alexnet model with the instances of the GTX 1080TI. Testing environment data (with the batch sizes 32, 64, 128, 256 and 512) is provided below:

- Instance types:ltbv21, ltbv18

- GPU:2x GTX 1080TI, 4x GTX 1080TI

- OS:CentOS 7

- CUDA / cuDNN:8.0 / 5.1

- TensorFlow GitHub hash:b1e174e

- Benchmark GitHub hash:9165a70

- Command:

# python3.5 benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py --num_gpus=2 (4) --model alexnet --batch_size 32 (optional 64, 128,256, 512) - Model:Alexnet

- Date of testing:June 2017

The test results are shown in the diagram below:

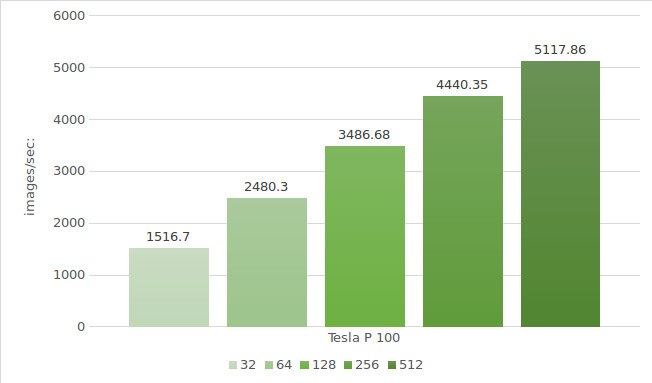

Tesla® P100 instance

Finally, it's time to test the Alexnet model with the Tesla® P100 instances. The testing environment (with batch sizes 32, 64, 128, 256 and 512) will look like this:

- Instance type:ltbv20

- GPU:2x NVIDIA® Tesla® P100

- OS:CentOS 7

- CUDA / cuDNN:8.0 / 5.1

- TensorFlow GitHub hash:b1e174e

- Benchmark GitHub hash:9165a70

- Command:

# python3.5 benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py --num_gpus=2 --model alexnet --batch_size 32 (optional 64, 128, 256, 512) - Model:Alexnet

- Date of testing:June 2017

The test results are shown in the diagram below:

Similar Alexnet tests in Google cloud and AWS showed the following results:

| GPU | Google cloud | AWS |

|---|---|---|

| 1x Tesla K80 | 656 | 684 |

| 2x Tesla K80 | 1209 | 1244 |

| 4x Tesla K80 | 2328 | 2479 |

| 8x Tesla K80 | 4640 | 4853 |

* Provided data was obtained from the following sources:

https://www.tensorflow.org/lite/performance/measurement#details_for_google_compute_engine_nvidia_tesla_k80

https://www.tensorflow.org/lite/performance/measurement#details_for_amazon_ec2_nvidia_tesla_k80

Now let's calculate the cost and processing time of 1,000,000 images on each LeaderGPU®, AWS and Google machine. The calculation was made according to the highest outcome of each machine.

| GPU | Number of images | Time | Cost (per minute) | Total cost |

|---|---|---|---|---|

| 2x GTX 1080 | 1000000 | 5m | € 0.03 | € 0.15 |

| 4x GTX 1080 | 1000000 | 2m 40sec | € 0.02 | € 0.05 |

| 8x GTX 1080 | 1000000 | 1m 46sec | € 0.11 | € 0.19 |

| 4x GTX 1080TI | 1000000 | 2m 5sec | € 0.02 | € 0.04 |

| 2х Tesla P100 | 1000000 | 3m 15sec | € 0.02 | € 0.07 |

| 8x Tesla K80 Google cloud | 1000000 | 3m 35sec | € 0.0825** | € 0.29 |

| 8x Tesla K80 AWS | 1000000 | 3m 26sec | € 0.107 | € 0.36 |

** The Google cloud service does not offer per minute payment plans. Per minute cost calculations are based on the hourly price ($ 5,645).

As can be concluded from the table, the image processing speed in VGG16 model has the highest outcome on 8x GTX 1080 from LeaderGPU®, while:

The initial lease cost at LeaderGPU® starts from as little as € 1.92, which is about 2.5 times lower than in the instances of 8x Tesla® K80 by Google Cloud, and about 3.6 times lower than in instances of 8x Tesla® K80 from Google AWS;

processing time was 38 minutes 53 seconds, which is 1.8 times faster than in the instances of 8x Tesla® K80 from the Google Cloud, and 1.7 times faster than in the instances of 8x Tesla® K80 from Google AWS.

Based on these facts it can be concluded that LeaderGPU® is way more profitable comparing to its competitors. LeaderGPU® allowing to achieve maximum speed at optimal prices. Rent the best GPU with flexible pricing tags at LeaderGPU® today!