Stable Diffusion: Riffusion

In previous articles, we explored how Stable Diffusion generates images. However, this generative neural network has even more to offer.

Riffusion is a Stable Diffusion model for creating and editing music. It generates a spectrogram of the desired musical segment and then converts that spectrogram into audio. Let’s install Riffusion on a LeaderGPU server and try it out.

Prerequisites

Start by updating the package cache and the installed packages:

sudo apt update && sudo apt -y upgrade

Don’t forget to install the NVIDIA® drivers, either with the autoinstall command or manually by following our step-by-step guide:

sudo ubuntu-drivers autoinstall

Reboot the server:

sudo shutdown -r now

To create a virtual environment, the developers suggest using Anaconda. You can also use venv, which we discussed in the Linux system utilities tutorial. Download the Anaconda installation script using curl:

curl --output anaconda.sh https://repo.anaconda.com/archive/Anaconda3-5.3.1-Linux-x86_64.sh

Make it executable:

chmod +x anaconda.sh

Then run it:

./anaconda.sh

Answer yes to all questions except the last one (installing Microsoft VSCode). Then log out, reconnect to the server over SSH, and create a new virtual environment with Python 3.9:

conda create --name riffusion python=3.9

Activate the new virtual environment:

conda activate riffusion

To work with audio formats other than WAV, you also need to install FFmpeg:

conda install -c conda-forge ffmpeg

Install Riffusion
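Before cloning the repository, it can be worth confirming that the previous steps worked. Below is a minimal sketch in POSIX shell; the helper names (`check_gpu`, `check_env`, `check_python`, `check_ffmpeg`, `preflight`) are hypothetical and not part of Riffusion. It assumes the environment is named `riffusion`, as above, and that `conda activate` exports `CONDA_DEFAULT_ENV`.

```shell
#!/bin/sh
# Hypothetical pre-flight helpers for this Riffusion setup (a sketch, not part of Riffusion).

# Prints "<GPU name>, <driver version>" for each GPU; fails if nvidia-smi is missing.
check_gpu() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi not found: the NVIDIA driver is probably not installed" >&2
    return 1
  fi
  nvidia-smi --query-gpu=name,driver_version --format=csv,noheader
}

# Fails unless the "riffusion" environment is active (assumes conda sets CONDA_DEFAULT_ENV).
check_env() {
  if [ "${CONDA_DEFAULT_ENV:-}" != "riffusion" ]; then
    echo "run 'conda activate riffusion' first" >&2
    return 1
  fi
}

# Fails unless the python found on PATH reports major.minor version 3.9.
check_python() {
  local version
  version="$(python -c 'import sys; print("%d.%d" % sys.version_info[:2])' 2>/dev/null)" ||
    { echo "python not found" >&2; return 1; }
  if [ "$version" != "3.9" ]; then
    echo "expected Python 3.9, found $version" >&2
    return 1
  fi
  echo "Python $version"
}

# Prints the first line of "ffmpeg -version"; fails if ffmpeg is missing (only WAV would work).
check_ffmpeg() {
  if ! command -v ffmpeg >/dev/null 2>&1; then
    echo "ffmpeg not found: only WAV files will work" >&2
    return 1
  fi
  ffmpeg -version | head -n 1
}

# Runs every check and returns non-zero if any of them failed.
preflight() {
  local failed=0
  check_gpu || failed=1
  check_env || failed=1
  check_python || failed=1
  check_ffmpeg || failed=1
  return "$failed"
}
```

To use it, save the functions to a file such as `preflight.sh` and, with the environment activated, run `. ./preflight.sh && preflight`. Every check runs even if an earlier one fails, so a single pass reports all missing pieces.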

Clone the Riffusion repository:

git clone https://github.com/riffusion/riffusion.git

Change to the cloned directory:

cd riffusion

Now edit the requirements file to pin two package versions. This prevents torch compatibility errors:

nano requirements.txt

Find these packages and set their versions as follows:

diffusers==0.9.0

torchaudio==2.0.1

Save the changes and continue preparing the virtual environment. The following command installs all the required packages:

python -m pip install -r requirements.txt

Finally, launch the “playground”, a simple web interface for exploring Riffusion’s features:

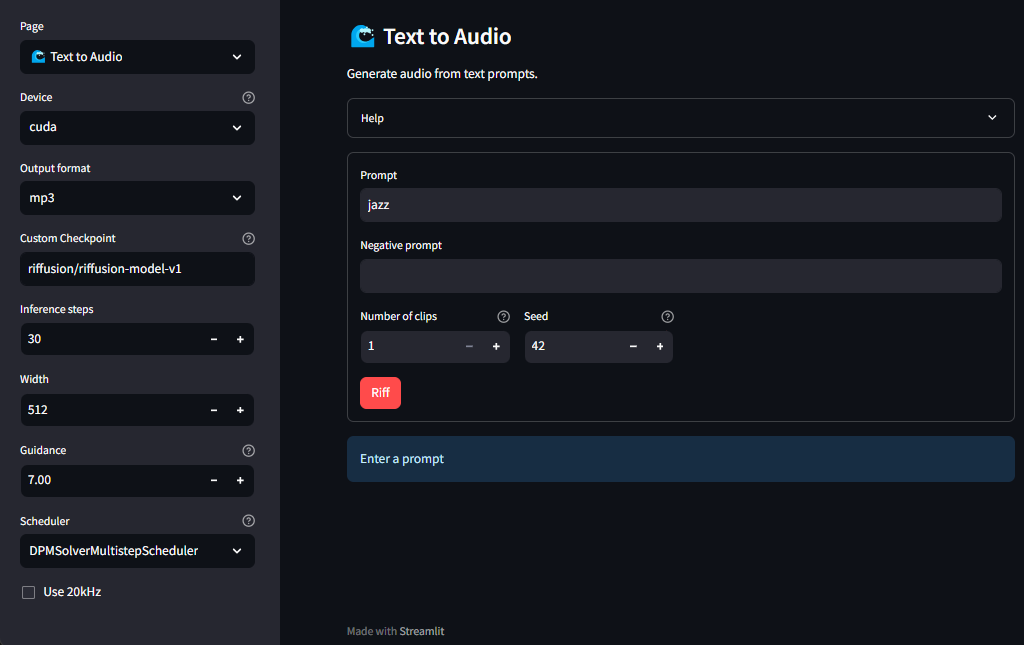

python -m riffusion.streamlit.playground

Open your favorite browser and go to http://[SERVER_IP]:8501/
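If port 8501 is not reachable from your computer (for example, because a firewall blocks it), one workaround, assuming you have SSH access to the server, is an SSH tunnel: run `ssh -L 8501:localhost:8501 [USER]@[SERVER_IP]` on your local machine and open http://localhost:8501/ instead. When the playground is started in the background, for example from a setup script, a small probe can wait until Streamlit accepts connections. The sketch below is hypothetical: the name `wait_for_playground` and its default values are our own, and it relies only on `curl`.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until the Streamlit playground responds.
# Arguments (all optional): URL, number of attempts, seconds between attempts.
wait_for_playground() {
  local url="${1:-http://localhost:8501/}"
  local attempts="${2:-30}"
  local delay="${3:-2}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # -f fails on HTTP errors, -s hides progress output, -o /dev/null discards the page body
    if curl -fs -o /dev/null "$url"; then
      echo "playground is up at $url"
      return 0
    fi
    # Sleep only if another attempt follows
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "no response from $url after $attempts attempts" >&2
  return 1
}
```

For example, `nohup python -m riffusion.streamlit.playground > playground.log 2>&1 &` followed by `wait_for_playground` starts the app in the background and returns once it answers on the default port, or gives up after about a minute.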

Test the playground

Now you can create music from text prompts and adjust the other generation parameters:

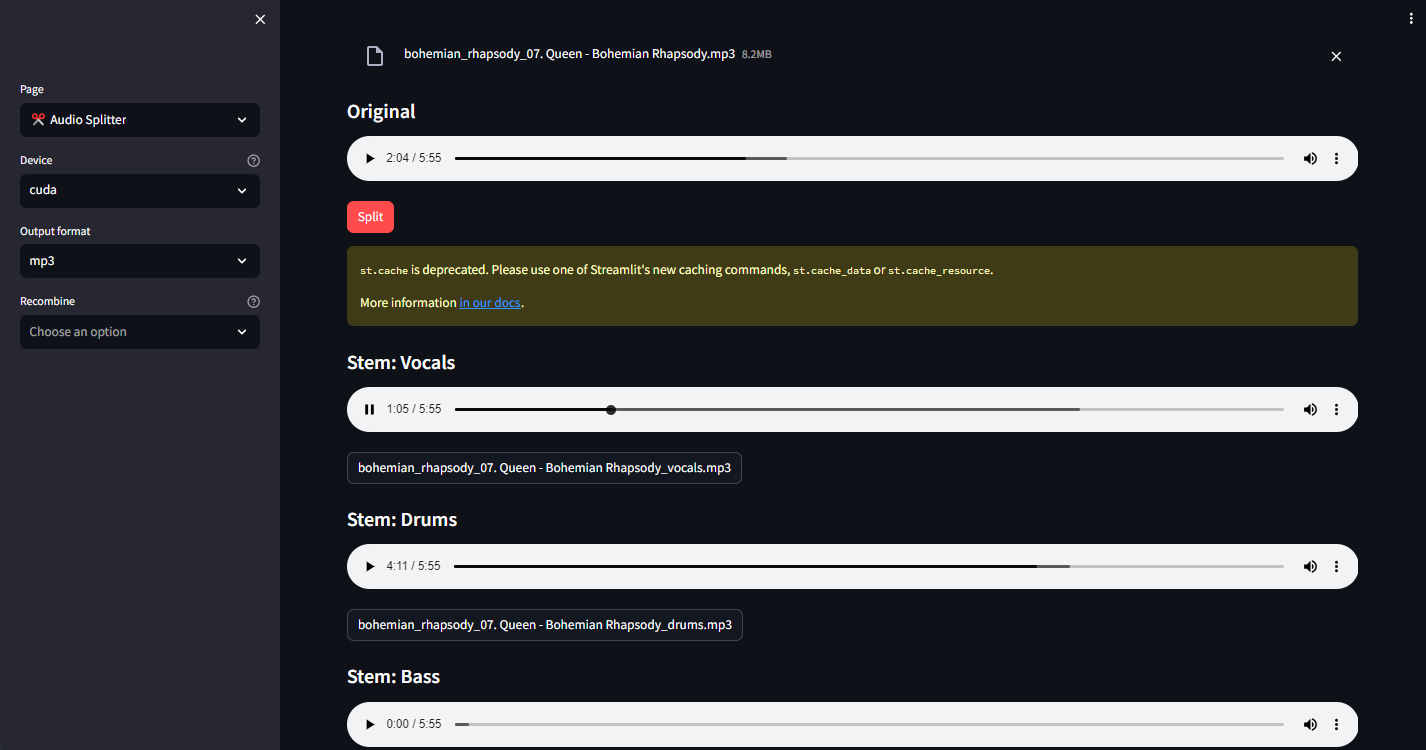

You can also do more advanced things, such as splitting audio into separate components. For example, you can extract the vocals from Queen’s “Bohemian Rhapsody”.

This is only one example of how Riffusion can be used. By building your own application on top of it, you can achieve much more, while LeaderGPU’s powerful servers handle the computation.


Updated: 04.01.2026

Published: 21.01.2025