Advantages and Disadvantages of GPU sharing

Moore’s Law has remained relevant for nearly half a century. Processor chips continue to pack in more transistors, and technologies advance daily. As technology evolves, so does our approach to computing. The rise of certain computing tasks has significantly influenced hardware development. For instance, devices originally designed for graphics processing are now key, affordable tools for modern neural networks.

The management of computing resources has also transformed. Large-scale services now rarely run on mainframes, as they did in the 1970s and ’80s. Instead, they rely on cloud services or build their own infrastructure. This shift has changed customer demands, with a focus on rapid, on-demand scaling and maximizing the use of allocated computing resources.

Virtualization and containerization technologies emerged as solutions. Applications are now packaged in containers with all necessary libraries, simplifying deployment and scaling. However, manual management became impractical as container numbers soared into the thousands. Specialized orchestrators like Kubernetes now handle effective management and scaling. These tools have become an essential part of any modern IT infrastructure.

Server virtualization

Concurrently, virtualization technologies evolved, enabling the creation of isolated environments within a single physical server. Virtual machines behave identically to regular physical servers, allowing the use of standard management tools. Depending on the hypervisor, a specialized API is often included, facilitating the automation of routine procedures.

However, this flexibility comes with reduced security. Attackers have shifted their focus from targeting individual virtual machines to exploiting hypervisor vulnerabilities. By gaining control of a hypervisor, attackers can access all associated virtual machines at will. Despite ongoing security improvements, modern hypervisors remain attractive targets.

Traditional virtualization has to address two key issues. The first is isolating virtual machines from one another. Bare-metal solutions avoid this problem because customers rent entire physical servers under their own control. For virtual machines, however, isolation is enforced in software at the hypervisor level. A coding error or unexpected bug can compromise this isolation, risking data leakage or corruption.

The second issue concerns resource management. It’s possible to guarantee resource allocation to specific virtual machines, but managing numerous machines this way presents a dilemma. If every machine is guaranteed its full allocation, much of that allocation sits idle most of the time, so fewer virtual machines fit on each physical server. This scenario is unprofitable for the infrastructure provider and inevitably leads to price increases.
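The effect of guaranteed allocations can be illustrated with a little arithmetic. The sketch below is purely illustrative: the function names, host size, reservation size, and average usage are hypothetical values chosen for the example, not measurements from any provider.

```python
def vms_per_host(host_cores: int, reserved_cores_per_vm: int) -> int:
    """Number of VMs that fit when every VM gets a hard core reservation."""
    return host_cores // reserved_cores_per_vm


def host_utilization(host_cores: int, reserved_cores_per_vm: int,
                     avg_cores_used_per_vm: float) -> float:
    """Fraction of the host's cores that are actually busy on average."""
    vms = vms_per_host(host_cores, reserved_cores_per_vm)
    return vms * avg_cores_used_per_vm / host_cores


# Hypothetical host: 64 cores; each VM reserves 8 cores but uses 2 on average.
print(vms_per_host(64, 8))            # 8
print(host_utilization(64, 8, 2.0))   # 0.25
```

With these assumed numbers, three quarters of the host is reserved but idle, which is the underutilization described above.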

Alternatively, you can use automatic resource management mechanisms. Although a virtual machine is declared with specific characteristics, in practice it receives only the minimum it currently needs within those limits. If the machine needs more processor time or RAM, the hypervisor will attempt to provide it, but can’t guarantee it. This situation is similar to airplane overbooking, where airlines sell more tickets than there are seats available.

The logic is identical. If statistics show that about 10% of passengers don’t show up for their flight, airlines can sell 10% more tickets with limited risk. If every passenger shows up, some won’t fit on board. The airline will face minor consequences in the form of compensation but will likely continue this practice.
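The overbooking bet can be put into numbers. The following is a minimal sketch, assuming every passenger shows up independently with the same probability (a simplification; real behavior is correlated). The function names and the 100-seat aircraft are hypothetical and exist only for illustration.

```python
from math import comb


def expected_arrivals(sold: int, show_rate: float) -> float:
    """Average number of passengers who show up."""
    return sold * show_rate


def overflow_probability(sold: int, capacity: int, show_rate: float) -> float:
    """Probability that more than `capacity` of `sold` passengers show up.

    Sums the binomial probabilities for capacity+1 .. sold arrivals.
    """
    return sum(
        comb(sold, k) * show_rate**k * (1 - show_rate) ** (sold - k)
        for k in range(capacity + 1, sold + 1)
    )


seats = 100  # hypothetical aircraft
for sold in (100, 105, 110):
    print(sold,
          expected_arrivals(sold, 0.9),
          overflow_probability(sold, seats, 0.9))
```

The same model carries over to a physical server: replace seats with physical cores or RAM, and passengers with virtual machines that demand their full allocation at the same moment.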

Many infrastructure providers employ a similar strategy. Some are transparent about it, stating they don’t guarantee constant availability of computing resources but offer significantly reduced prices. Others use similar mechanisms without advertising it. They’re betting that not all customers will consistently use 100% of their server resources, and even if some do, they’ll be in the minority. Meanwhile, idle resources generate profit.

In this context, bare-metal solutions have an advantage. They guarantee that allocated resources are fully managed by the customer and not shared with other users of the infrastructure provider. This eliminates scenarios where heavy load from a neighboring tenant degrades performance.

GPU virtualization

Classic virtualization inevitably faces the challenge of emulating physical devices. To reduce overhead costs, special technologies have been developed that allow virtual machines to directly access the server’s physical devices. This approach works well in many cases, but when applied to graphics processors, it creates immediate limitations. For instance, if a server has 8 GPUs installed, only 8 virtual machines can access them.

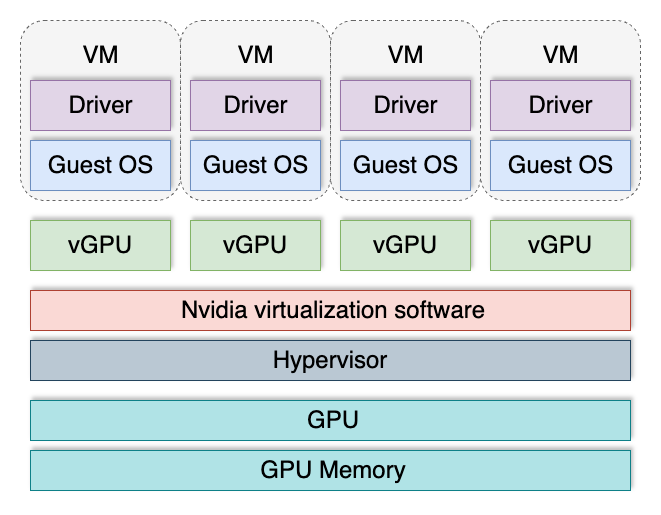

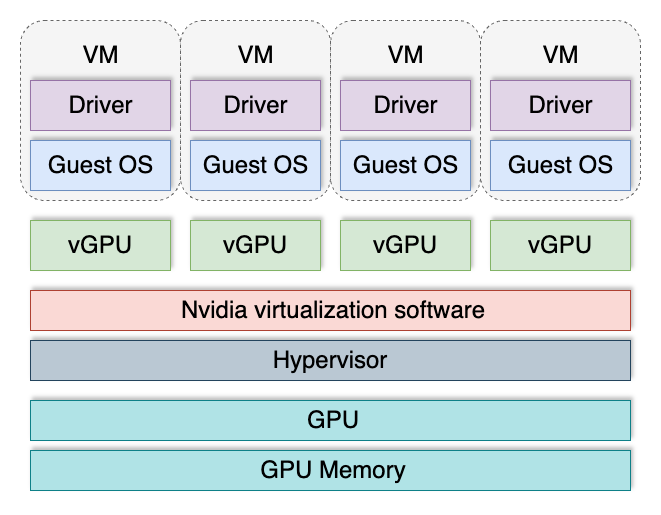

To overcome this limitation, vGPU technology was invented. It divides one GPU into several logical ones, which can then be assigned to virtual machines. This allows each virtual machine to get its own “slice of the pie”, and the total number of machines is no longer limited by the number of GPUs installed in the server.
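The difference in capacity between the two approaches can be sketched as follows. This is an illustration under a stated assumption: each GPU is split into equal, fixed-size memory slices. The function names, memory sizes, and slice size are hypothetical, and real vGPU products apply their own profile rules and per-GPU limits.

```python
def max_vms_passthrough(gpus: int) -> int:
    """Direct device access: one whole GPU per virtual machine."""
    return gpus


def max_vms_vgpu(gpus: int, gpu_memory_gb: int, profile_memory_gb: int) -> int:
    """Assumed model: each GPU is cut into equal memory slices, one per VM."""
    if profile_memory_gb <= 0:
        raise ValueError("profile_memory_gb must be positive")
    return gpus * (gpu_memory_gb // profile_memory_gb)


# Hypothetical server: 8 GPUs with 48 GB each, 8 GB per virtual GPU.
print(max_vms_passthrough(8))    # 8
print(max_vms_vgpu(8, 48, 8))    # 48
```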

Virtual GPUs are most commonly used when building VDI (Virtual Desktop Infrastructure) in areas where virtual machines require 3D acceleration. For example, a virtual workplace for a designer or planner typically involves graphics processing. Most applications in these fields perform calculations on both the central processor and the GPU. This hybrid approach significantly increases productivity and ensures optimal use of available computing resources.

However, this technology has several drawbacks. It’s not supported by all GPUs and is only available in the server segment. Support also depends on the installed version of the operating system and the GPU driver. vGPU has a separate licensing mechanism, which substantially increases operating costs. Additionally, its software components can potentially serve as attack vectors.

Recently, information was published about eight vulnerabilities affecting all users of NVIDIA® GPUs. Six vulnerabilities were identified in GPU drivers, and two were found in the vGPU software. These issues were quickly addressed, but they serve as a reminder that isolation mechanisms in such systems are not flawless. Constant monitoring and timely installation of updates remain the primary ways to ensure security.

When building infrastructure to process confidential and sensitive user data, any virtualization becomes a potential risk factor. In such cases, a bare-metal approach may offer better quality and security.

Conclusion

Building a computing infrastructure always requires risk assessment. Key questions to consider include: Is customer data securely protected? Do the chosen technologies create additional attack vectors? How can potential vulnerabilities be isolated and eliminated? Answering these questions helps make informed choices and safeguard against future problems.

At LeaderGPU, we’ve reached a clear conclusion: currently, bare-metal technology is superior in ensuring user data security while serving as an excellent foundation for building a bare-metal cloud. This approach allows our customers to maintain flexibility without taking on the added risks associated with GPU virtualization.

Updated: 04.01.2026

Published: 23.01.2025